By David Calvert, January 2017

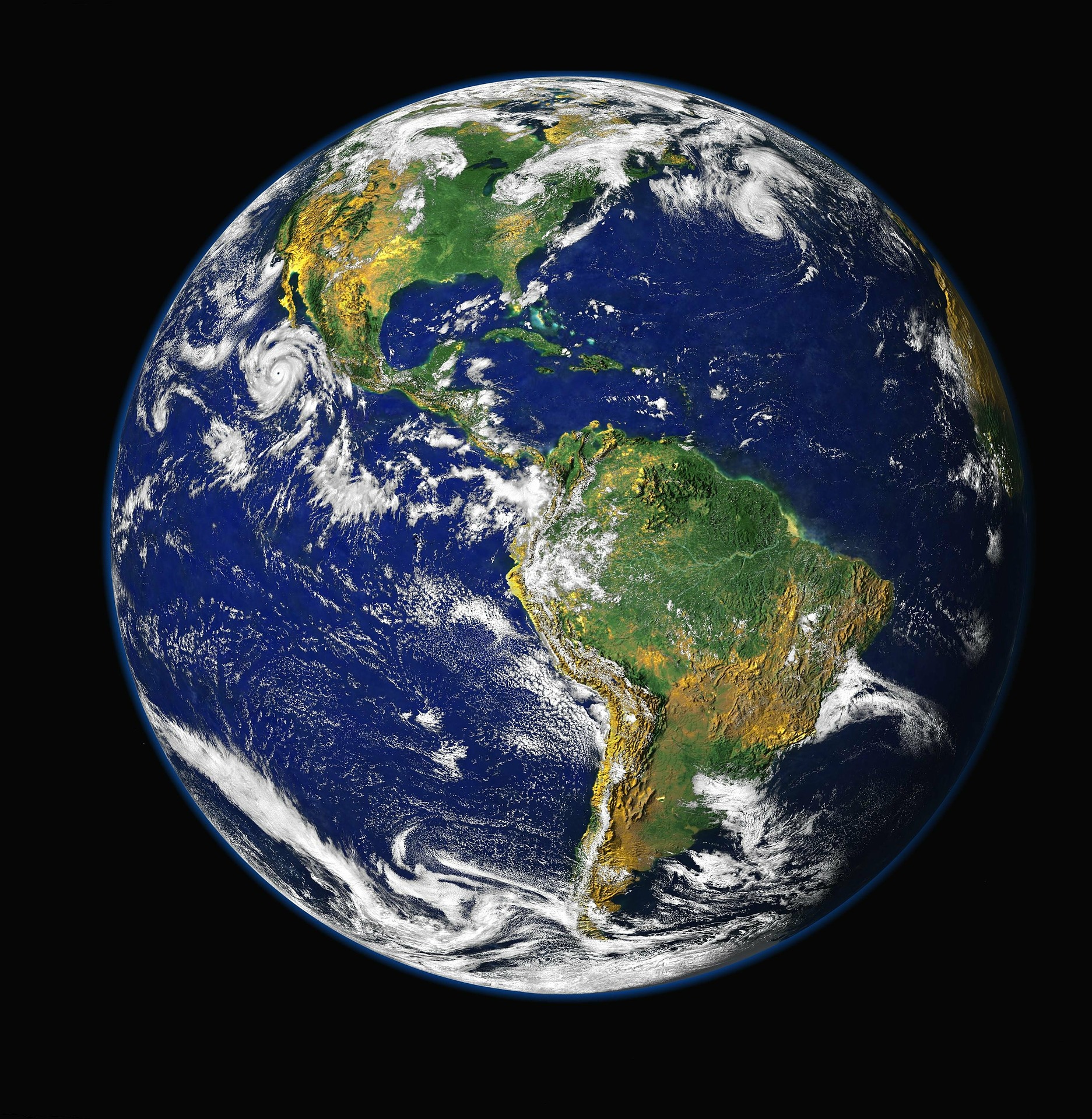

The political turmoil of last year, including Brexit and the election of Donald Trump as the 45th President of the United States, prompted a great deal of speculation about the impact of these dramatic changes and has, of course, provided satire shows with material for at least the next four years.

As 2017 begins, though, we can start to see some of the realities of these decisions, and this made me wonder how they could affect the work of formulators. The regulatory landscape is a key driver of many new formulations, whether that means lowering volatile organic compound (VOC) emissions, restricting the use of certain chemicals under REACh regulations or developing formulations with a lower carbon footprint.

The latter is not necessarily driven by specific regulations but is more a response to initiatives such as the various climate change agreements, for example Kyoto or Paris. In some cases, the move towards a lower carbon footprint in its various guises is driven by a desire to demonstrate Corporate Social Responsibility (CSR). Some of the major FMCG companies, such as RB, Procter & Gamble and Unilever, have placed sustainability high on their agenda.

In their 2015 Sustainability Report, RB set targets to reduce the CO2 footprint of their products by one third by 2020 and to reduce the CO2 emissions from their manufacturing by 40% before 2020.

P&G have a similar commitment, and in their 2015 sustainability objectives they state that they are focusing their efforts in the following areas:

“(1) Reducing the intensity of greenhouse gas emissions (GHG) from our own operations through:

- Driving energy efficiency measures throughout our facilities
- Transitioning fuel sources toward cleaner alternatives
- Driving more energy-efficient modes of transporting finished products to our customers

(2) Helping consumers to reduce their own GHG emissions through the use of our products via:

- Product and packaging innovations that enable more efficient consumer product use and energy consumption
- Consumer education to reduce GHG emissions such as the benefits of using cold water for machine washing

(3) Partnering with external stakeholders to reduce greenhouse gas emissions in our supply chain, including:

- Ensuring our sourcing of renewable commodities does not contribute to deforestation
- Developing renewable material replacements for petroleum derived raw materials”

Unilever launched their Sustainable Living Plan in 2010 as their blueprint for sustainable business and have stated that they wish to decouple their growth from their environmental impact. As part of this, they aim to reduce their environmental impact by 50% by 2030, a goal that includes a target to be carbon positive in manufacturing by 2030 by saving 1 million tonnes of CO2.

So how might all of this be changed by the potential appointment of Scott Pruitt as the new head of the Environmental Protection Agency (EPA) in the USA? For those unfamiliar with Scott Pruitt, he has served as Oklahoma’s attorney general since 2011 and is currently representing the state in a lawsuit against the EPA to halt the Clean Power Plan. Pruitt is a well-known climate change sceptic who has cast doubt on the evidence that human activity is causing the planet to warm. At the time of writing, the confirmation hearing for Scott Pruitt has just taken place and, barring some startling revelation, it is likely that he will become the new EPA head. We will have to see whether he introduces measures to reduce restrictions on fossil fuels in the US and whether any of these companies change their approach to sustainability. My own view is that the approach of the companies mentioned will not change, but perhaps the pace of change will slow somewhat and the focus will shift outside the US.

If the EPA then introduces measures that “relax” regulation on other emissions, such as those to water, will this mean that agrochemical formulations will be easier to develop? Is this good news for formulators? A new approach to the pressure groups that cast doubt on any scientific findings may well be refreshing if it reduces the pressure to remove some agrochemical actives from the market, but we will have to wait and see. In a similar vein, the former Chief Executive of the Crop Protection Association in the UK stated in a letter to the Financial Times in December 2016 that he hoped the UK would “lead the way in striking a sensible balance between protecting and enhancing the environment and at the same time, supporting UK farmers to provide a healthy, safe, reliable and affordable food supply”.

Turning to Brexit, I expect change to be slower, and any impact is unlikely to be seen until at least two years after Article 50 is triggered. There remains the possibility that the UK’s exit could lead to yet more regulations for formulators to comply with, although it is hoped that common sense will prevail and that the majority of European regulations will simply be “ported over”. Making the case for the UK to change its regulatory landscape, the President of the British Crop Protection Council argued this month that, post-Brexit, the UK should move closer to the US EPA’s risk-based approach. He argued for the removal of the “EU’s unscientific hazard based assessments and the associated Candidates for Substitution and Comparative assessment processes”.

Whether the UK can afford to implement its own new regulatory approach is another question, and the whole issue may be decided more by politics than by practical considerations.

I guess my conclusion from compiling this article is that formulators – like everyone else – will be affected by the political turmoil of 2016, but how, and whether the effect will be positive, cannot yet be determined. As they say, all we can do is “wait and see” and be quick to adapt once clarity emerges, if it ever does!